If you are a computer geek who plays Capture the Flag (CTF) and the like, you probably already know what this is.

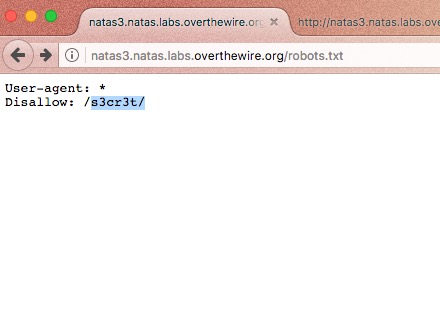

Let's see what robots.txt is.

Have you ever wondered how a search engine crawls through hundreds of pages on a website and gives you the exact page containing what you searched for? Or what gives the search engine its instructions? More precisely, how does the search engine know which pages it should crawl? This is where robots.txt comes into play. robots.txt is a text file that webmasters create to instruct web robots (typically search engine robots) how to crawl pages on their website.

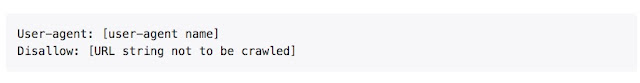

The basic format of a robots.txt file is a User-agent line followed by one or more Disallow lines.

A single robots.txt file can contain multiple groups of User-agent and Disallow statements.

Syntax

- User-agent : The specific user-agent (web crawler) to which we give instructions.

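- Allow : Tells the crawler it can access a particular directory or subdirectory even when its parent directory is disallowed. It started out as a Googlebot extension, but it is now part of the Robots Exclusion Protocol standard (RFC 9309) and is honored by most major crawlers.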

- Disallow : Tells the crawler not to crawl the given URL path.

- Sitemap : Used to call out the location of any XML sitemap(s) associated with this URL.

- Crawl-delay : Says how many seconds the crawler should wait between requests. This directive is non-standard and Googlebot ignores it.
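To see these directives in action, here is a minimal sketch using Python's standard `urllib.robotparser` module. The sample file, the `ExampleBot` user-agent name and the `a.com` paths are made up for illustration. Note that this particular parser applies the first matching rule, so the `Allow` line is placed before the broader `Disallow` line; other crawlers may resolve conflicts differently (for example by the most specific match).

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt content, used only for this illustration.
SAMPLE = """\
User-agent: *
Allow: /private/press/
Disallow: /private/
Crawl-delay: 10

Sitemap: https://a.com/sitemap.xml
"""

parser = RobotFileParser()
parser.parse(SAMPLE.splitlines())  # parse from memory, no network request

# "ExampleBot" is an assumed crawler name; it falls under the "*" group.
allowed_press = parser.can_fetch("ExampleBot", "https://a.com/private/press/launch.html")
blocked_private = parser.can_fetch("ExampleBot", "https://a.com/private/notes.html")
allowed_home = parser.can_fetch("ExampleBot", "https://a.com/index.html")

delay = parser.crawl_delay("ExampleBot")  # value of Crawl-delay, in seconds
sitemaps = parser.site_maps()             # requires Python 3.8 or newer

print(allowed_press, blocked_private, allowed_home, delay, sitemaps)
```

In a real crawler you would typically call `set_url()` and `read()` to download the live file instead of parsing a string.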

Let's say there is a robots.txt file like this.

User-agent: Googlebot

Disallow: /

This tells Googlebot not to crawl any page of this website. Other crawlers are not affected, because the rule applies only to the named user-agent.
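You can check that reading of the two lines with Python's standard `urllib.robotparser` module. This is only a sketch: `ExampleBot` and the `a.com` URL are assumed names, not anything from a real site.

```python
from urllib.robotparser import RobotFileParser

# The two rule lines from the example above, with no blank line between them.
rules = ["User-agent: Googlebot", "Disallow: /"]

parser = RobotFileParser()
parser.parse(rules)

# Googlebot is blocked from every path on the site.
googlebot_ok = parser.can_fetch("Googlebot", "https://a.com/any/page.html")

# "ExampleBot" (a hypothetical crawler) matches no group, so it is allowed.
other_ok = parser.can_fetch("ExampleBot", "https://a.com/any/page.html")

print(googlebot_ok, other_ok)
```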

Requirements

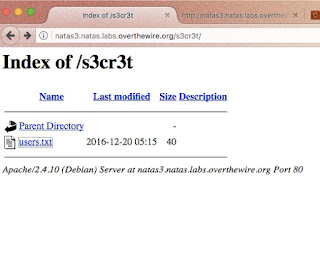

1. The robots.txt file must be in the top-level directory of the website (e.g. a.com/robots.txt).

2. The file name is case sensitive and must be all lowercase: robots.txt, not Robots.txt or ROBOTS.TXT.

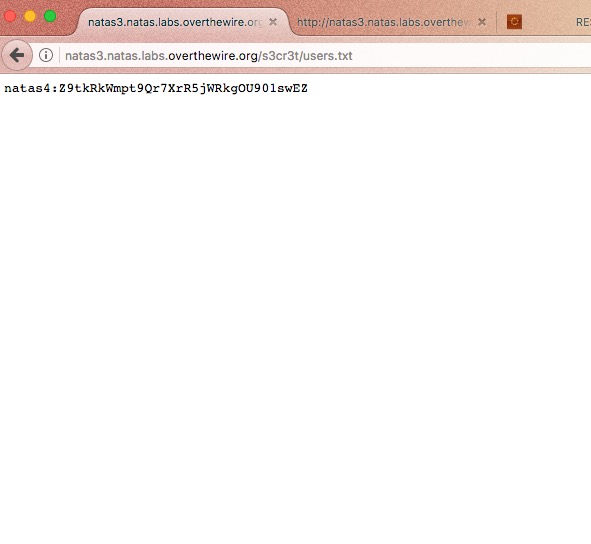

3. robots.txt is not for hiding private information. Any website that has a robots.txt file makes it publicly available, so anyone can read the paths it lists.

4. Each subdomain needs its own robots.txt file.

e.g. A.com and a.A.com should each have their own robots.txt file.

5. Best practice: list the sitemap at the bottom of the robots.txt file.
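Requirements 1 and 4 together mean a crawler can work out where to look for the file from any page URL: keep the scheme and host, and replace everything else with `/robots.txt`. A minimal sketch of that idea follows; the helper name `robots_url` and the example URLs are hypothetical.

```python
from urllib.parse import urlsplit, urlunsplit


def robots_url(page_url: str) -> str:
    """Return the robots.txt location for the host that serves page_url."""
    parts = urlsplit(page_url)
    # Keep scheme and host; drop the original path, query and fragment.
    return urlunsplit((parts.scheme, parts.netloc, "/robots.txt", "", ""))


# A domain and its subdomain resolve to two different robots.txt files.
main_site = robots_url("https://a.com/shop/item?id=1")
sub_site = robots_url("https://a.a.com/post/1")

print(main_site)
print(sub_site)
```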